
Te-Yen Wu

Assistant Professor at Florida State University

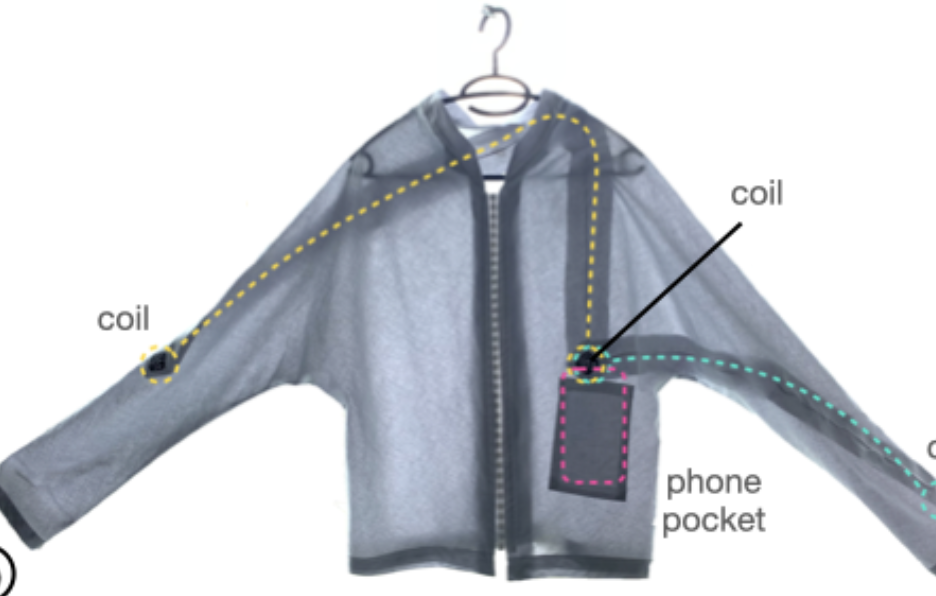

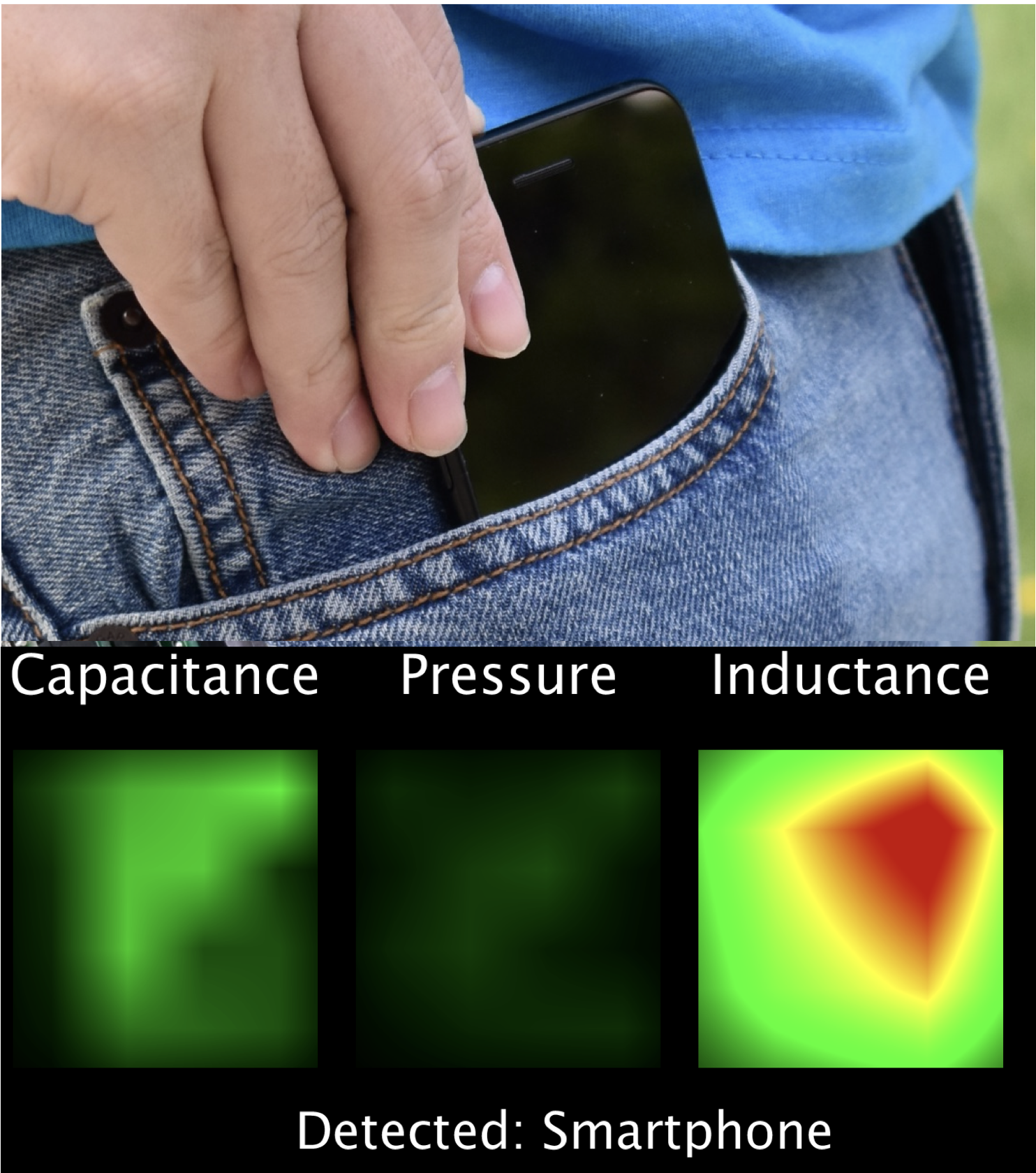

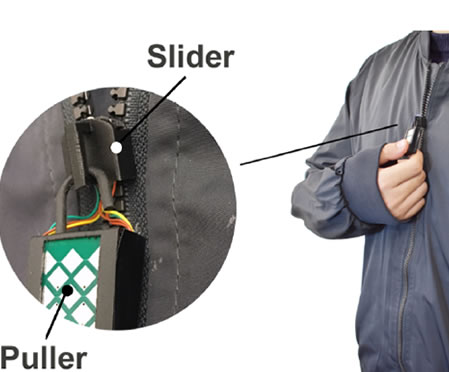

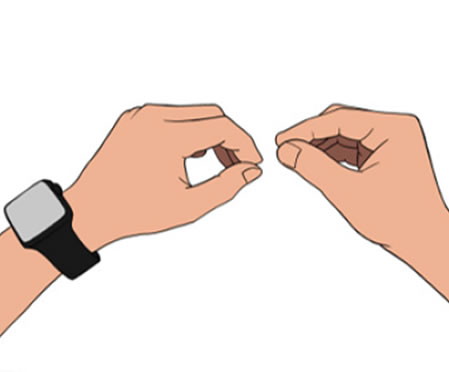

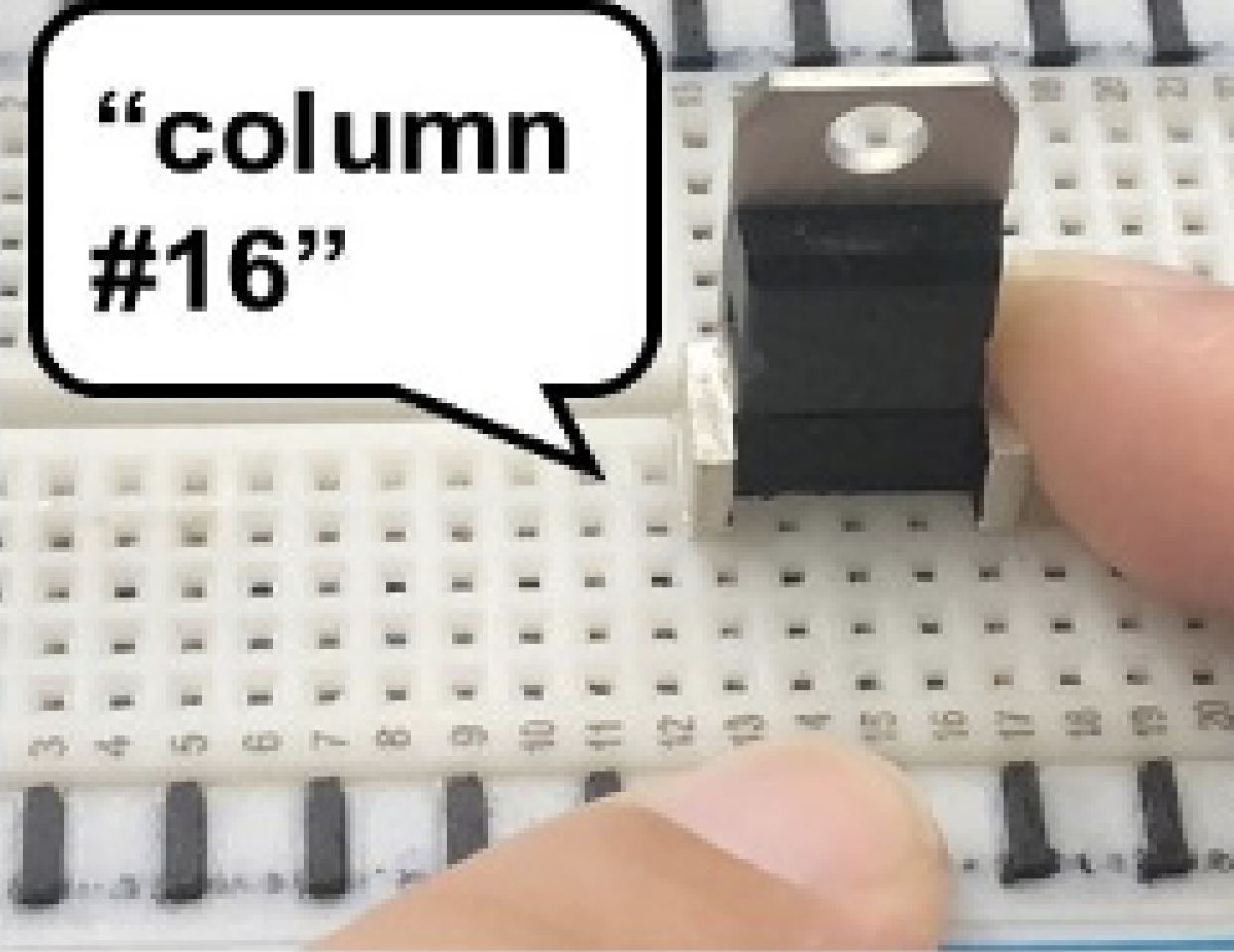

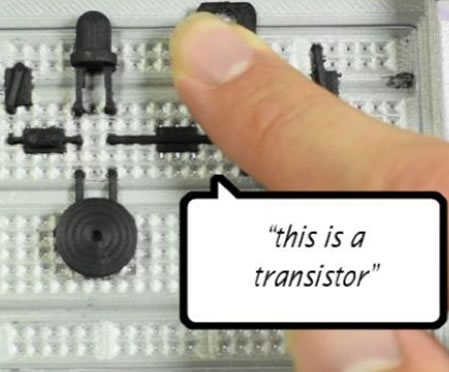

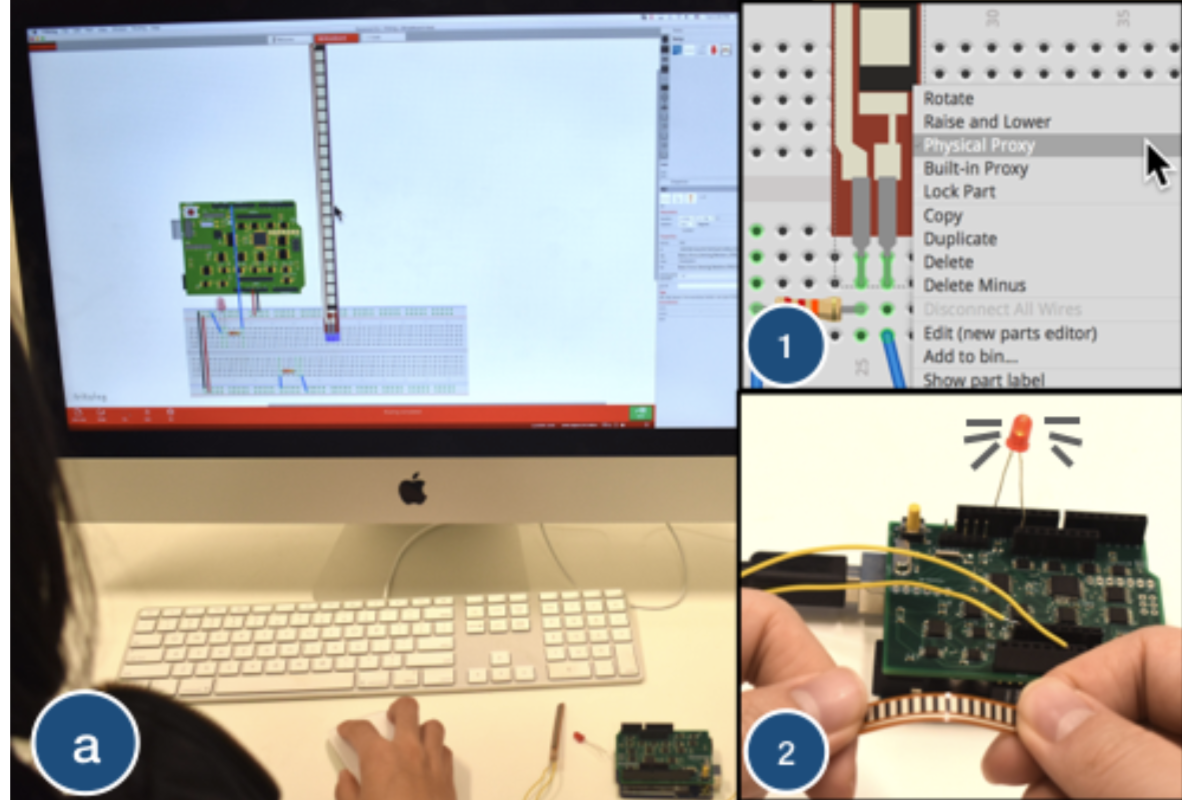

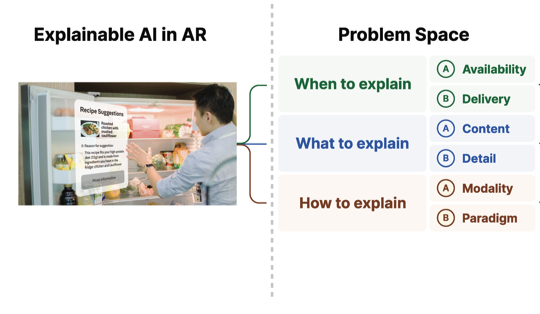

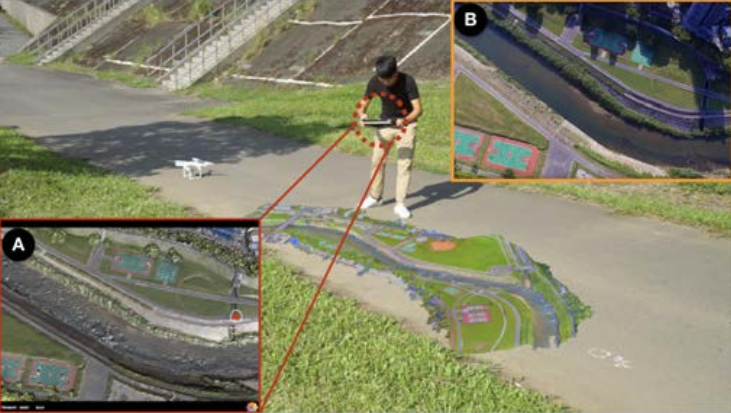

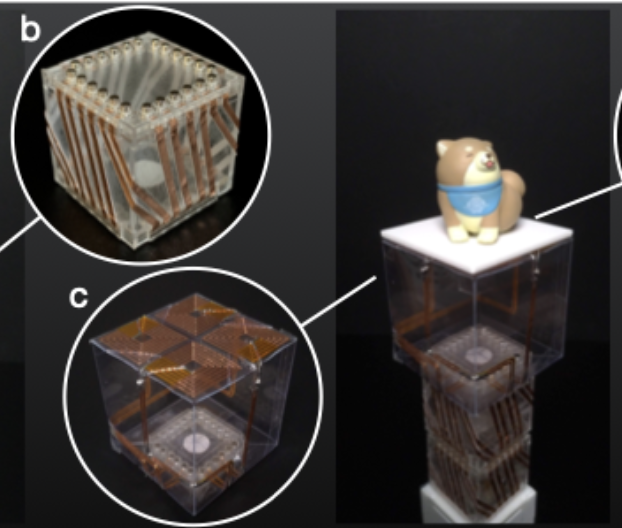

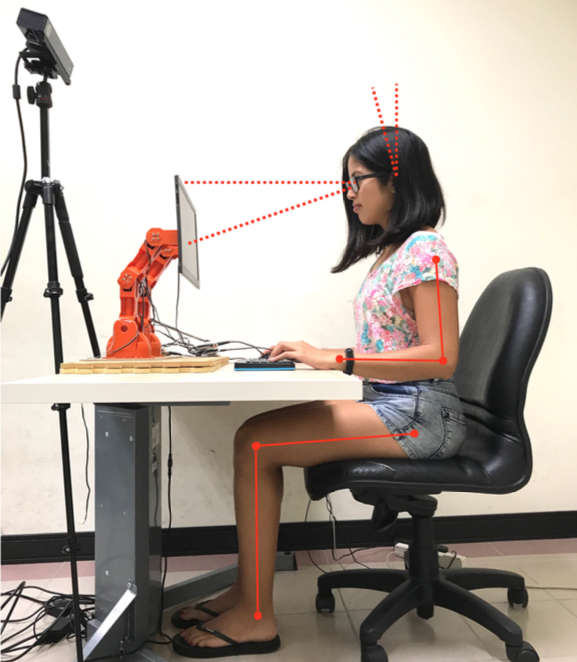

My research lies at the intersection of Human-Computer Interaction, Cyber-Physical Systems, and AI technologies. My career objective is to establish the scientific and technical foundations for the material-centric paradigm of Physical AI. My research takes a highly interdisciplinary approach: it combines electrical engineering and materials science to design and fabricate low-cost intelligent materials, computer science to develop lightweight operating systems and algorithms, and human-centered design to create tools and evaluate usability and performance in real-world contexts. I have also worked on other topics, such as text entry systems and Human-AI interaction in VR/AR.

My research is generally published in top HCI venues such as CHI and UIST, and I also contribute to top AI conferences such as ICLR. It has attracted considerable public interest through online news outlets (e.g., Engadget, Times).

Currently, I direct the MakeX Lab at FSU. I am looking for highly motivated PhD students who are interested in Human-Computer Interaction research. If you are interested in working with me, please send an email to [tw23l (at) fsu (dot) edu] with your CV and a one-page research statement about the topic you wish to explore.